Just to share a test were I wanted to push Query Tool to high limits with CSV stuff.

I have a csv file with 9,5 million lines containing all Dutch postal adresses and PostCodes. This file weighs a lightweight of 2,5 GB.

I wanted to csv-parse it with Query Tool, then upload it to SQL Server, because SSMS import tool is not really wanting to help in importing that file.

I first did some test on a reduced csv file, then tried the full thing.

select c.*

from read_file_text('C:\mydir\myfile.csv')@os fle

join csvtable

( passing fle.file_contents

column delimiter ';'

skip lines 1

columns PostcodeNummers int position 1

, PostcodeLetters text position 2

, PostcodeVolledig varchar2 position 3

, Huisnummer varchar2 position 4

, HuisnummerLetter varchar2 position 5

, HuisnummerToevoeging varchar2 position 6

, Straat varchar2 position 7

, Plaats varchar2 position 8

, Gemeente varchar2 position 9

, Provincie varchar2 position 10

, Doel varchar2 position 11

, Oppervlakte varchar2 position 12

, VerblijfsStatus varchar2 position 13

, PandStatus varchar2 position 14

, BouwJaar year position 15

, Latitude varchar2 position 16

, Longitude varchar2 position 17

, XCoordinaat decimal position 18

, YCoordinaat decimal position 19

, CreatieDatum varchar2 position 20

, NevenVestiging varchar2 position 21

, ObjectID number position 22

) c

limit 100

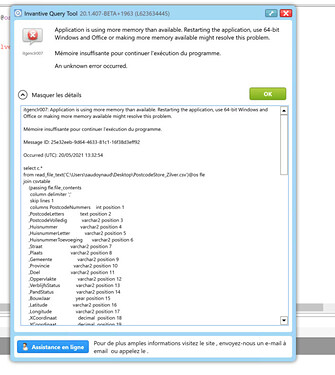

On the full set, the Query Tool drained all the memory and raised an OutOfMemoryException:

Obviously the limit 100 is not applied until after full processing.

This is just to share, non blocking. I gave been able to use Excel + Power Query to do my work in selecting some part of the post codes.