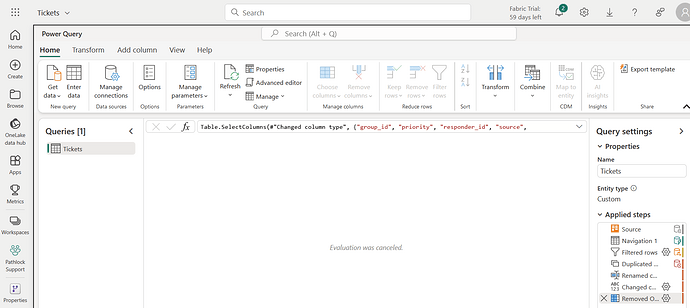

Using delegation, the following SQL was executed on the UniversalSQL editor on Invantive Cloud using an empty cache:

set use-http-disk-cache false

set use-http-memory-cache false

select count(*)

from tickets_incremental

Last query returned 64460 rows. Execution time was 615 seconds.

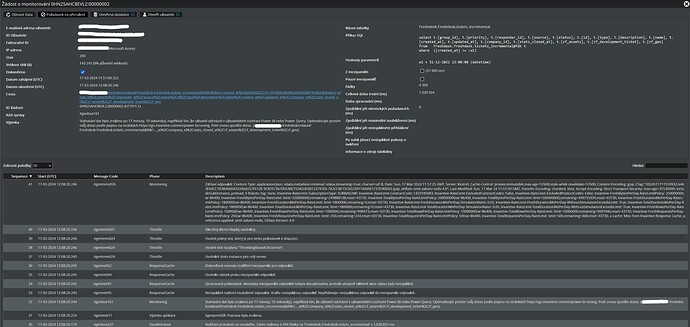

Execution took 1652 API calls. Of these were 2 API calls to get the spam tickets, and 2 API calls to get the deleted tickets. The other 1648 API calls were calls to retrieve tickets updated since a specific date, including statistics. Since each of these 1652 also includes the statistics, the total number of API credits used will be 3304.

An optimization will be included in 24.0.100 to remove the unneeded collections of statistics from spam and deleted tickets. This saves 4 API credits in this scenario.

Using 1648 API calls with each at most 100 rows to retrieve 64460 rows is not efficient. This indicates that only 40% of the maximum payload is effectively used. A percentage of 100 is not always realistic achievable given the limitations of the Freshdesk API protocol, but it should be closer. An analysis will be made to study possibilities to reduce the number of API calls needed.

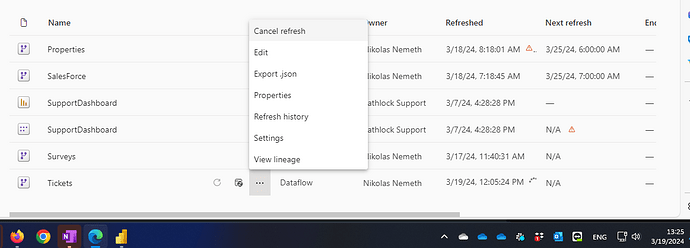

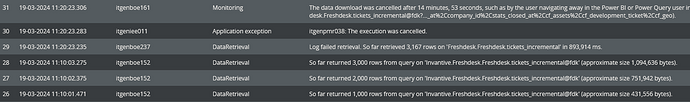

The last query was then re-run using a filled incremental cache. The execution time was 33 seconds. The query consumed 826 API calls, which is way too much. Each API call retrieved data one second apart. This might be related to the previous found ineffiency. Together with the previous problem this will be studied further.

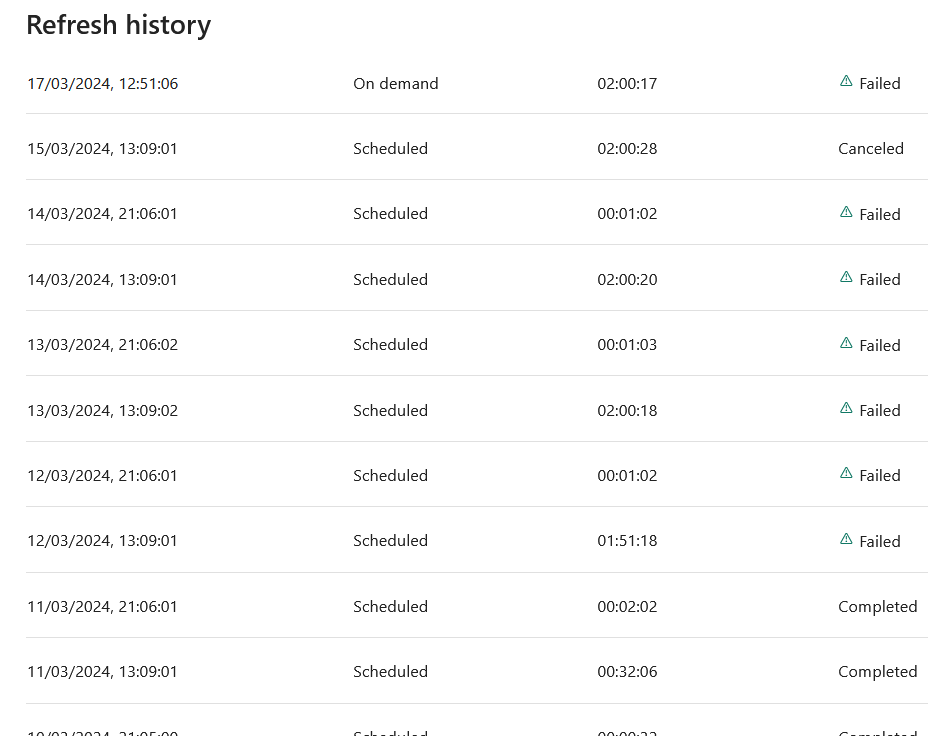

From the information it seems that the problem started occurring when a change on surveys for Freshdesk was taken into production.

From the looks of it, a bug was introduced and depending on the data volume, Freshdesk Plan and hourly/minutely rate limits being applied it will surface.

Once the analysis is done, a reply will be added.

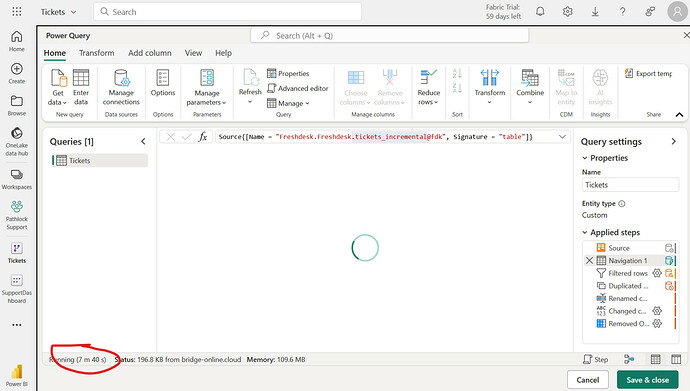

Background 429 errors and Extremely Slow Response / Spinning

The slow response and 429 error indicate that the API rate limit for API calls on Freshdesk was exceeded (see https://developer.freshdesk.com/api/#ratelimit). The rate limits on Freshdesk vary widely. Depending on the plan there is a limit on the number of calls per minute.

It is weird that the error contains the following text:

You have exceeded the limit of requests per hour.

This indicates that an hourly rate limit is applied instead of the limit per minute. In the past Freshdesk limited the number of calls per hour instead of per hour. To switch to the new minute level rate limits, it is recommended to contact support@freshdesk.com. Typically these were between 3.000 and 5.000 (Blossom/ Garden 3000, Estate/Forest 5.000).

The Freshdesk driver also supports the hourly rate limits, but the technical features provided by those are more limited.

This might also explain the spinning. When the hourly limit is exceeded, a 429 error is thrown. In that case the Freshdesk driver will delay some (increasing and somewhat random) time and try again to execute the API call. With minutely limits and even with competing processes, this will typically succeed in at most a few tries.

However, with hourly rate limits, the retry mechanism will try again for 10 times by default, each time with a longer delay. This is configurable through connection string keys such as download-error-429-too-many-requests-max-tries (see SQL-Driverattributen voor Freshdesk API - Freshdesk API Data Model).

It is recommended to switch to the minute level rate limit. This provides new calls every minute instead of once per hour (which might cause a download to spin for one hour).

Please reach out to Freshdesk to have your plan switched after analyzing possible impact.