Tests through UniversalSQL

Tested on UniversalSQL editor.

Number of divisions is 31:

select count(*)

from systemdivisions@eol

Number of rows is 2.128.834 (864 seconds, initial load):

select count(*)

from transactionlinesincremental@eol

Number of rows per division took 45 seconds (incremental load):

select division

, count(*)

from transactionlinesincremental@eol

group

by division

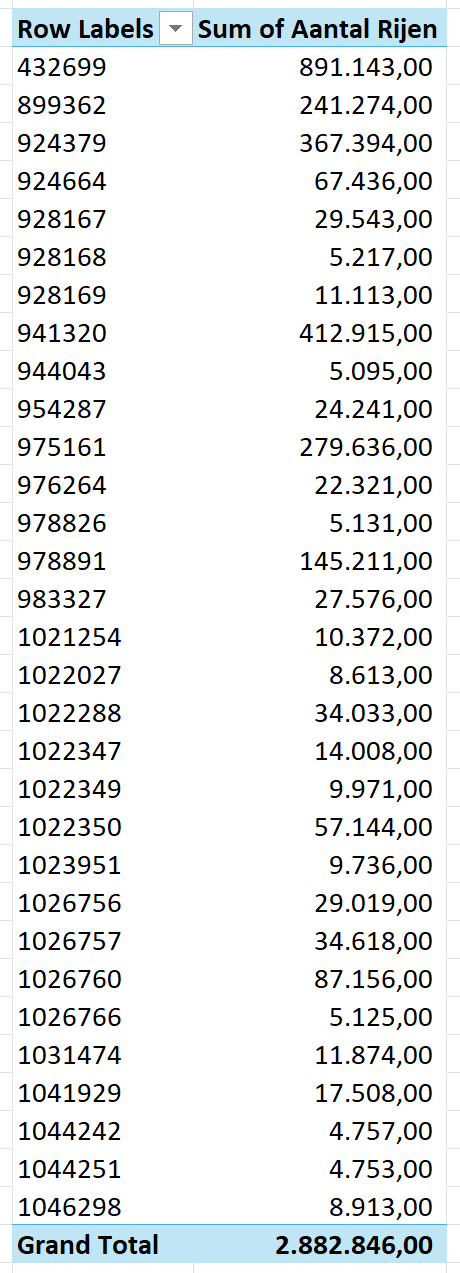

29 companies, largest company has 692640 rows.

Strange is that 1044242 and 1044251 have no rows in SQL editor and 1046298 has 3803 rows instead of 8913. There are also other differences in number of rows.

Checked using the following queries, but number of rows remains 0, even when using other APIs:

use 1044251@eol

select count(*) from transactionlinesbulk@eol

select count(*) from transactionlines@eol

It seems that the fast approach to count rows in TransactionLines does not work reliable (see Estimate size of an Exact Online company). The approach used by Get My Report as described in the linked article has been tested. However, also in this case the number of rows is 0 for 1044251:

create or replace table countqueries@inmemorystorage

as

select ste.name

, sdn.label

, sdn.code

, replace(ODATA_SERVICE_URL_FOR_SELECT, '{division}', sdn.code) || '/$count'

url

, ste.name || ' @ ' || sdn.label

orig_system_group

from SystemDivisions@eol sdn

join SYSTEMTABLES@DataDictionary ste

on ste.PROVIDER_NAME = 'ExactOnlineAll'

and ste.CATALOG = 'ExactOnlineREST'

and ste.schema = 'Sync'

and ste.odata_service_url_for_select like '%TransactionLines%'

and code = 1044251

--

-- Send the API requests untranslated as a

-- "Native Scalar Request". Results will be registered in

-- the same table "NativePlatformScalarRequest".

--

-- Use is made of XML-output. JSON can be acquired by

-- setting Content-Type.

--

insert into exactonlinerest..nativeplatformscalarrequests@eol

( url

, orig_system_group

, fail_on_error

)

select url

, orig_system_group

--

-- Replace by true if you don't have permissions on everything.

-- Tables which the current Exact Online user does not have permissions to

-- will then be silently skipped.

--

, false

from COUNTQUERIES@InMemoryStorage

--

-- Per table and company the number of rows.

--

select orig_system_group

, RESULT_TEXT

from exactonlinerest..NATIVEPLATFORMSCALARREQUESTS@eol

where SUCCESSFUL = 'Y'

and orig_system_group is not null

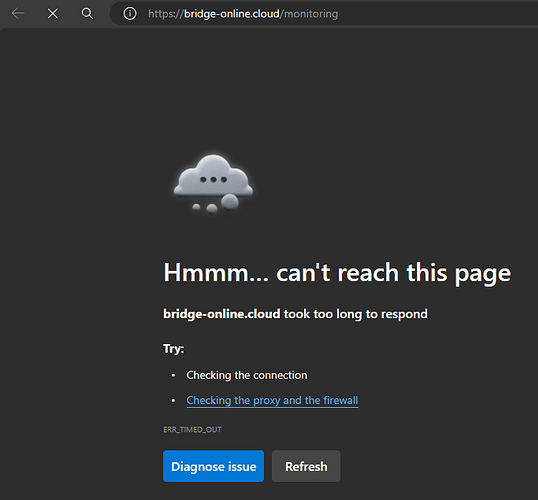

Tests on Bridge Online (OData4)

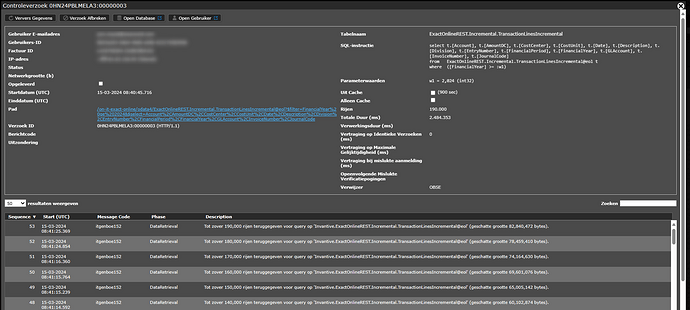

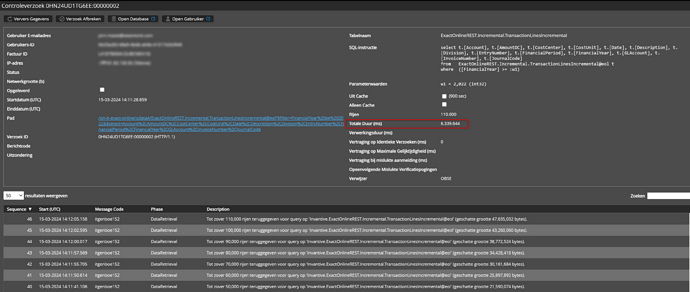

The initial download of https://bridge-online.cloud/acme-exact-online/odata4/ExactOnlineREST.Incremental.TransactionLinesIncremental@eol on Bridge Online was 2,4 GB and completed after 1542 seconds using curl as download tool as described in How do I use curl to execute an Invantive Cloud OData4 request?.

However, a redownload took 48 minutes and wasn’t finished then.

After this (due to forgetting to request compressed downloads), all downloads ended with itgenboe403 on Fair Use.

Switched to using --compressed using curl. Download is now 244 seconds for an update and 19 seconds when from cache.

The failure of the redownload of 48 minutes seems to resemble the problem experienced. The download will be rerun for some time to see whether a stall can be triggered.

Questions

Can you please double check the output of Get My Report whether the 2.882.846 rows are for TransactionLines?